Perplexity’s ranking algorithm works through a structured chain of decisions.

When a user asks a question, the system:

- breaks it down into intent,

- retrieves possible answers,

- tests each for quality and trust,

- aligns those results with live user behavior, and

- shows the final citations.

Every page that is cited has passed through several checkpoints: semantic relevance, accessibility, authority, engagement, and freshness. Each of these steps is controlled by specific parameters inside Perplexity’s backend.

This breakdown explains that process in full. You’ll see HOW the system works, so you can align your content with what Perplexity already favors.

TL;DR

- Perplexity doesn’t crawl the whole web itself. It reformulates your query, calls Bing’s Search API, and filters those results through its own infrastructure.

- Intent Mapping classifies each query by topic and purpose. High-value subjects (AI, tech, science) get boosted visibility, while low-priority ones are suppressed.

- Retrieval merges Bing’s index with Perplexity’s internal cache. Only pages that are crawlable, renderable, and semantically relevant enter the candidate pool.

- Assessment (L3 Reranker) uses an XGBoost model to score that pool for semantic depth, trust, freshness, and engagement.

- embedding_similarity_threshold decides if content is even relevant enough to stay.

- Manual whitelists (GitHub, Stack Overflow, etc.) and memory networks favor authoritative, interconnected sources.

- Early engagement (new_post_ctr) gets you in; historic engagement (historic_engagement_v1) helps you stay.

- Signal Alignment double-checks results against real-world signals – YouTube trends, multimodal assets (tables, code, ALT-text), and engagement metrics (discover_engagement_7d).

- Final Selection trims to just a few high-confidence answers. Pages with dislikes or low click-throughs (dislike_filter_limit, discover_no_click_7d_batch_embedding) quietly disappear.

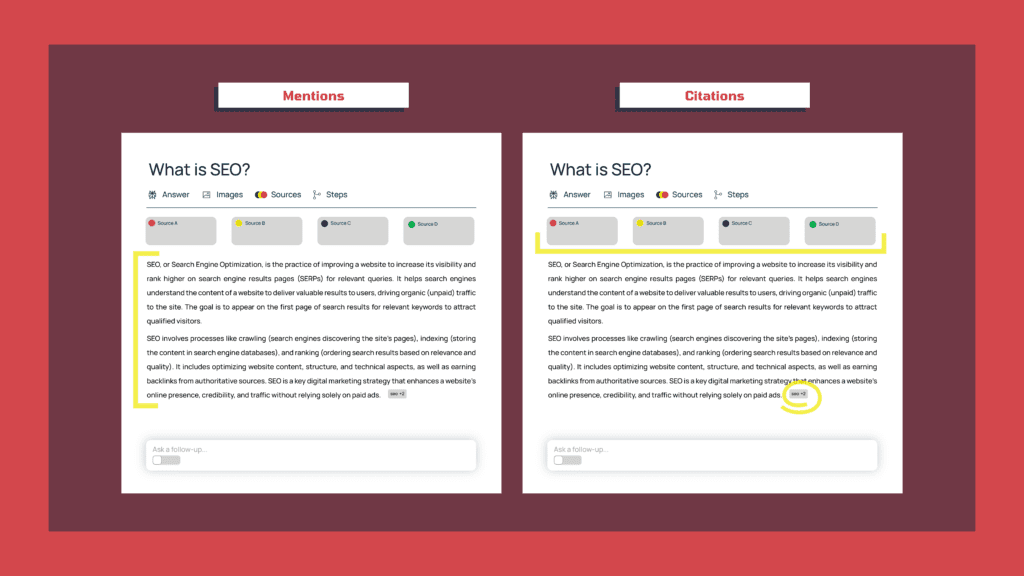

What “Getting Mentioned” in Perplexity Actually Means

When we say “mentions” in Perplexity, there are two very different outcomes, and both matter in different ways.

A mention is when your brand or product name shows up in the body of an answer in plain text. It signals that the system recognizes you as relevant to the topic, and it builds background authority.

A citation is when Perplexity links directly to your page as one of its sources. Citations show up as visible references under the answer, with your domain attached, so users can see where the information came from and have a one-click path to you if they want more.

While brand mentions strengthen your credibility, it’s citations that make it easiest for users to engage with your brand.

The strategies in this guide are about giving you the best shot at both, because the more you’re mentioned, the more credible you look, and the more often you’re cited, the faster those mentions start turning into revenue.

How Perplexity Works Behind the Scenes

We’ve broken this down based on ranking parameters that have surfaced through Perplexity’s own request logs. Those parameters show things like how new posts are judged, how quality thresholds are applied, and even which topic categories get boosts or penalties.

Pulling those signals together gives us a clearer picture of the stages content moves through before it ever shows up in an answer.

Intent Mapping

The very first thing Perplexity does is figure out what kind of query it’s dealing with and where to route it. To do so, the system classifies the query’s topic and builds its semantic vector, then it routes that vector to the right index (trending vs evergreen).

Layer 1: Topic and Semantic Context Initialization

In this layer, the query is semantically understood and ranked for importance before it is categorized as “evergreen” or “trending”.

Perplexity starts by encoding the user query using its internal embedding model (text_embedding_v1). This converts the natural-language input into a dense vector so it can be compared across topics.

Next, it applies topic weights. Parameters such as subscribed_topic_multiplier and top_topic_multiplier tell the system which subjects deserve preferential exposure, while restricted_topics sharply down-weights low-priority areas like entertainment or sports.

| Parameter Category | Key Parameters | Purpose / Impact | Example |
| --- | --- | --- | --- |
| Topic Classification | subscribed_topic_multiplier, top_topic_multiplier, default_topic_multiplier, restricted_topics | Assigns visibility weight by category. High-value topics (AI, Tech, Science) gain exponential reach; restricted topics are suppressed. | An “AI Prompt Engineering Guide” receives far higher baseline exposure than “Celebrity AI Filters.” |
| Semantic Relevance Init. | text_embedding_v1 | Embeds the query into vector space for downstream matching. | “How does Perplexity rank answers?” yields tighter embeddings than “Perplexity ranking???” |
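To make the multiplier idea concrete, here is a minimal sketch of how category weights could scale a baseline visibility score. The parameter names come from the logs above; the numeric values, the topic sets, and the `apply_topic_weight` helper are hypothetical, so treat this as an illustration of the concept rather than Perplexity’s implementation.

```python
# Hypothetical weights: the parameter names appear in Perplexity's logs,
# but every value here is invented for illustration.
TOPIC_WEIGHTS = {
    "subscribed_topic_multiplier": 3.0,
    "top_topic_multiplier": 2.0,
    "default_topic_multiplier": 1.0,
}
TOP_TOPICS = {"ai", "tech", "science"}
RESTRICTED_TOPICS = {"entertainment", "sports"}  # assumed contents of restricted_topics
RESTRICTED_PENALTY = 0.1                         # assumed down-weight factor


def apply_topic_weight(base_score: float, topic: str, subscribed: set[str]) -> float:
    """Scale a baseline visibility score by a topic multiplier (toy model)."""
    topic = topic.lower()
    if topic in RESTRICTED_TOPICS:
        return base_score * RESTRICTED_PENALTY
    if topic in subscribed:
        return base_score * TOPIC_WEIGHTS["subscribed_topic_multiplier"]
    if topic in TOP_TOPICS:
        return base_score * TOPIC_WEIGHTS["top_topic_multiplier"]
    return base_score * TOPIC_WEIGHTS["default_topic_multiplier"]


print(apply_topic_weight(1.0, "AI", set()))      # 2.0
print(apply_topic_weight(1.0, "sports", set()))  # 0.1
```

With these assumed values, an AI query scores 2.0 against a baseline of 1.0, a sports query drops to 0.1, and a topic the user subscribes to would get the largest boost.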

Layer 2: Query Routing and Deduplication

Once the system knows what the query means, it decides where it should live.

The logs show parameters like trending_news_index_name and trending_news_minimum_should_match. That’s the system watching search activity in real time, and once enough people pile onto a topic, it becomes a part of the “trending” index.

-> To become a part of trending queries, speed, freshness, and synchronisation with live events are everything, because that index is threshold-driven and volatile.
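The name trending_news_minimum_should_match mirrors `minimum_should_match`, an option in Elasticsearch and OpenSearch bool queries that sets how many optional (“should”) clauses a document must satisfy to count as a match. Assuming Perplexity uses it in that sense (the logs show only the name, not the logic), one plausible reading is that a query must share at least N terms with a trending topic to be routed to the trending index. The sketch below reimplements that counting rule in plain Python; the helper name and sample terms are hypothetical.

```python
def matches_trending(query: str, trending_terms: list[str], minimum_should_match: int) -> bool:
    """Return True if at least `minimum_should_match` trending terms appear in the query.

    Mirrors the integer form of Elasticsearch's bool-query `minimum_should_match`;
    percentage forms and phrase matching are not handled in this toy version.
    """
    query_tokens = set(query.lower().split())
    hits = sum(1 for term in trending_terms if term.lower() in query_tokens)
    return hits >= minimum_should_match


# Hypothetical trending topic broken into optional ("should") terms.
trending = ["openai", "pricing", "update"]
print(matches_trending("OpenAI pricing update today", trending, 2))  # True
print(matches_trending("OpenAI API tutorial", trending, 2))          # False
```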

On the other side, you’ve got suggested_index_name and suggested_num_per_cluster, which control evergreen clusters – these are groups of related questions that Perplexity treats as long-term categories (for example, queries like “best project management tools for startups,” and “ClickUp vs Asana comparison”).

-> To become one of the sources Perplexity pulls into its evergreen bucket, you have to EXHAUST a topic cluster (pillars, comparisons, how-tos) and make every guide state exactly what it covers through its title, headings, FAQs, etc.

| Parameter Category | Key Parameters | Purpose / Impact | Example |
| --- | --- | --- | --- |
| Trending Detection | trending_news_index_name, trending_news_minimum_should_match | Tracks real-time interest; promotes topics crossing engagement thresholds. | A sudden surge for “OpenAI pricing update” pushes it into trending results. |
| Evergreen Clustering | suggested_index_name, suggested_num_per_cluster | Builds long-term topic clusters for recurring searches. | A complete set of “SEO for SaaS” guides keeps surfacing in evergreen suggestions. |
| Deduplication | fuzzy_dedup_threshold, fuzzy_dedup_enabled | Consolidates similar queries to strengthen signal. | “Perplexity ranking system” and “How Perplexity ranks content” collapse into one canonical query. |
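The deduplication row can be illustrated with Python’s standard-library `difflib.SequenceMatcher`, which returns a similarity ratio between 0 and 1. Treating fuzzy_dedup_threshold as a cutoff on that ratio is an assumption, as is the 0.85 value; Perplexity’s actual matcher is not documented.

```python
from difflib import SequenceMatcher

FUZZY_DEDUP_ENABLED = True
FUZZY_DEDUP_THRESHOLD = 0.85  # assumed cutoff on the 0..1 similarity ratio


def normalize(query: str) -> str:
    """Lowercase and strip punctuation so trivial variants compare as equal."""
    return "".join(ch for ch in query.lower() if ch.isalnum() or ch.isspace()).strip()


def dedup_queries(queries: list[str]) -> list[str]:
    """Keep the first query from each near-duplicate group (greedy, order-dependent)."""
    if not FUZZY_DEDUP_ENABLED:
        return list(queries)
    canonical: list[str] = []
    for query in queries:
        norm = normalize(query)
        is_new = all(
            SequenceMatcher(None, norm, normalize(kept)).ratio() < FUZZY_DEDUP_THRESHOLD
            for kept in canonical
        )
        if is_new:
            canonical.append(query)
    return canonical


print(dedup_queries([
    "Perplexity ranking system",
    "perplexity ranking system???",
    "best CRM for startups",
]))
# ['Perplexity ranking system', 'best CRM for startups']
```

A character-level ratio like this only reliably catches near-identical phrasings. Collapsing differently worded queries such as the table’s example pair would more plausibly rely on embedding similarity.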

Cracking Perplexity Rankings

Perplexity isn’t a black box. Learn how it ranks content and how we support SaaS teams building visibility in AI answers.

Retrieval

Once Perplexity understands what the user is asking, it moves into retrieval. This is the stage where it actually goes out to collect information that could answer the query.

The process begins with Bing.

Perplexity doesn’t crawl the entire web from scratch each time you ask something. Instead, it piggybacks on Bing’s massive search infrastructure through the Bing Web Search API, which acts like a live bridge to the internet.

Here’s how that exchange plays out.

When you type a question into Perplexity, the system reformulates it, translating your natural language into a structured REST request (an HTTP GET call that carries the search terms as query parameters) that Bing can interpret. Bing then returns a JSON bundle of initial results, including snippets, titles, and URLs ranked by relevance. At this point, Perplexity takes those Bing-supplied links and starts validating them one by one using PerplexityBot.
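For reference, the public Bing Web Search API v7 takes an HTTP GET to `https://api.bing.microsoft.com/v7.0/search` with the query in the `q` parameter and a subscription key in the `Ocp-Apim-Subscription-Key` header. It returns JSON whose `webPages.value` list holds each result’s `name`, `url`, and `snippet`. The sketch below only builds the request and parses a hard-coded sample response, so it runs offline. How Perplexity reformulates the query before this call is not public, and the sample data is invented.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

BING_ENDPOINT = "https://api.bing.microsoft.com/v7.0/search"


def build_bing_request(query: str, api_key: str, count: int = 10) -> Request:
    """Build (but do not send) a Bing Web Search v7 GET request."""
    params = urlencode({"q": query, "count": count, "mkt": "en-US"})
    return Request(f"{BING_ENDPOINT}?{params}",
                   headers={"Ocp-Apim-Subscription-Key": api_key})


def parse_results(payload: str) -> list[dict]:
    """Extract title, URL, and snippet from a Bing JSON response body."""
    data = json.loads(payload)
    return [
        {"title": item["name"], "url": item["url"], "snippet": item.get("snippet", "")}
        for item in data.get("webPages", {}).get("value", [])
    ]


# Invented sample response with the same shape as a real one.
SAMPLE = json.dumps({"webPages": {"value": [
    {"name": "How Perplexity ranks answers", "url": "https://example.com/guide",
     "snippet": "A walkthrough of the ranking pipeline."},
]}})

req = build_bing_request("how does perplexity rank answers", api_key="YOUR_KEY")
print(req.full_url)
print(parse_results(SAMPLE))
```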

THIS validation step is where technical SEO choices make or break your visibility.

For instance, if your site’s robots.txt blocks BingPreview, or if a page hides its main content behind heavy JavaScript, Perplexity can’t actually read it, and the page is quietly dropped before it ever reaches the ranking pipeline.

The pages that pass this validation, meaning they can be fetched, rendered, and parsed cleanly, are added to Perplexity’s retrieval pool. That’s the internal shortlist of eligible documents from which the model will later choose its citations and synthesize answers.
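You can check the robots.txt side of this yourself with Python’s standard `urllib.robotparser`. The sketch parses an example robots.txt that blocks BingPreview and asks whether each user agent may fetch a page. The file contents and URL are made up, and this covers crawl permission only, not JavaScript rendering.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt (invented) that blocks BingPreview but allows everyone else.
ROBOTS_TXT = """\
User-agent: BingPreview
Disallow: /

User-agent: *
Allow: /
"""


def crawl_permissions(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Return whether each user agent may fetch `url` under the given robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


print(crawl_permissions(ROBOTS_TXT, "https://example.com/guide",
                        ["PerplexityBot", "BingPreview"]))
# {'PerplexityBot': True, 'BingPreview': False}
```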

Next, the Union Retrieval System (parameter: enable_union_retrieval) comes into play. Here, Perplexity merges Bing’s index with its own in-house cache of previously fetched and indexed pages.

Then calculate_matching_scores kicks in as an early semantic check. It compares the query embedding to each document’s vector and trims out anything obviously off-topic before the heavier ranking model steps in.

Finally, enable_ranking_model flags that this pool will be handed over to the machine-learning system (the L3 Reranker) once retrieval ends.
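A union merge like the one enable_union_retrieval describes can be sketched as a de-duplicated combination of two result lists keyed by URL. Which source wins on conflicts and the record fields used here are assumptions; the logs only show that the flag exists.

```python
def union_retrieval(bing_results: list[dict], cache_results: list[dict]) -> list[dict]:
    """Merge live Bing results with cached pages, de-duplicating by URL.

    Assumption: a live Bing record replaces a cached copy of the same URL.
    """
    merged: dict[str, dict] = {}
    for doc in cache_results:
        merged[doc["url"]] = {**doc, "source": "cache"}
    for doc in bing_results:
        merged[doc["url"]] = {**doc, "source": "bing"}  # overwrite any stale cache entry
    return list(merged.values())


bing = [{"url": "https://a.example/1", "title": "Fresh A"}]
cache = [{"url": "https://a.example/1", "title": "Old A"},
         {"url": "https://b.example/2", "title": "Cached B"}]
pool = union_retrieval(bing, cache)
print([(d["url"], d["source"]) for d in pool])
# [('https://a.example/1', 'bing'), ('https://b.example/2', 'cache')]
```

Page B stays in the pool even though Bing did not return it, which matches the idea of a previously fetched page reappearing from Perplexity’s own cache.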

Alongside these core systems, feed parameters keep the intake balanced.

feed_retrieval_limit_topic_match caps how many results can come from a single topical feed (e.g., all AI tool reviews or all GitHub pages), and persistent_feed_limit does the same at the domain level. Without them, one site or subject could flood the candidate pool and distort later scoring.
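The two caps can be modeled as counters applied while filling the candidate pool. The limit values, and the assumption that documents arrive already sorted by score, are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse

FEED_RETRIEVAL_LIMIT_TOPIC_MATCH = 3  # assumed max docs per topical feed
PERSISTENT_FEED_LIMIT = 2             # assumed max docs per domain


def enforce_feed_limits(docs: list[dict]) -> list[dict]:
    """Walk score-sorted docs and skip any that would exceed a topic or domain cap."""
    topic_counts = Counter()
    domain_counts = Counter()
    kept = []
    for doc in docs:
        domain = urlparse(doc["url"]).netloc
        if topic_counts[doc["topic"]] >= FEED_RETRIEVAL_LIMIT_TOPIC_MATCH:
            continue
        if domain_counts[domain] >= PERSISTENT_FEED_LIMIT:
            continue
        topic_counts[doc["topic"]] += 1
        domain_counts[domain] += 1
        kept.append(doc)
    return kept


# Five "AI tools" posts from one blog, plus one from another site.
docs = [{"url": f"https://blog.example/ai-tool-{i}", "topic": "ai tools"} for i in range(5)]
docs.append({"url": "https://other.example/review", "topic": "ai tools"})
print([d["url"] for d in enforce_feed_limits(docs)])
```

Only two posts from the single blog survive the domain cap, which leaves room for the other site’s review.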

In a nutshell, the retrieval stage acts like a controlled intake system:

- Bing opens the gate, Perplexity filters for accessibility and semantic fit, merges in its own index, and enforces diversity before anything reaches the reranker.

- By the time retrieval finishes, only pages that are crawlable, contextually relevant, and balanced across topics remain in play, forming the vetted pool from which the real ranking work begins.

| System Layer | Key Parameters | Function / Impact on Ranking | Example |
| --- | --- | --- | --- |
| Core Retrieval Infrastructure | enable_union_retrieval | Merges Bing’s web index with Perplexity’s own cached pages to form a unified retrieval pool. | A page previously fetched by Perplexity can reappear even if Bing doesn’t return it in the latest query. |
| Semantic Matching Layer | calculate_matching_scores | Performs an early embedding-based similarity check to remove off-topic documents before reranking. | A page loosely related to the query’s keywords but semantically irrelevant is filtered out. |
| Ranking Pipeline Trigger | enable_ranking_model | Flags that the vetted retrieval pool will be passed to the L3 reranker for deeper evaluation. | Once retrieval finishes, these pages advance to the assessment stage for scoring. |
| Feed Distribution Controls | feed_retrieval_limit_topic_match, persistent_feed_limit | Restrict overrepresentation from the same feed or topic during retrieval to maintain diversity. | Prevents 10 similar “AI tools” pages from one blog from filling the entire candidate pool. |
| Crawl & Accessibility Validation | (PerplexityBot + BingPreview behavior) | Ensures each page is fetchable, renderable, and readable; inaccessible or blocked pages are dropped. | A site blocking BingPreview in robots.txt won’t make it into Perplexity’s pool. |

Assessment

After retrieval, Perplexity runs everything through its L3 reranker – a machine-learning quality filter that decides what to keep from the current pool.

Here, parameters such as l3_reranker_drop_threshold and l3_reranker_drop_all_docs_if_count_less_equal act as quality and volume safeguards.

As identified in Metehan Yesilyurt’s 2025 analysis, they remove documents that fall below Perplexity’s internal quality score and can even discard an entire result set if too few high-quality pages remain. (Exact threshold values aren’t publicly documented, but browser-level traces confirm this behavior.)
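Because the exact values aren’t public, the sketch below uses invented numbers to show how the two safeguards could interact: a per-document score cutoff, then a rule that empties the whole set when too few documents survive. The reranker’s scores are taken as given (no XGBoost model is trained here), and reading l3_reranker_drop_all_docs_if_count_less_equal as “drop everything if the survivor count is at or below N” is an assumption based on the parameter name.

```python
L3_RERANKER_DROP_THRESHOLD = 0.45                  # assumed quality cutoff
L3_RERANKER_DROP_ALL_DOCS_IF_COUNT_LESS_EQUAL = 1  # assumed minimum viable set size


def l3_quality_gate(scored_docs: list[tuple[str, float]]) -> list[tuple[str, float]]:
    """Drop low-scoring docs, then discard the whole set if too few remain."""
    survivors = [(url, s) for url, s in scored_docs if s >= L3_RERANKER_DROP_THRESHOLD]
    if len(survivors) <= L3_RERANKER_DROP_ALL_DOCS_IF_COUNT_LESS_EQUAL:
        return []
    return survivors


print(l3_quality_gate([("a", 0.9), ("b", 0.6), ("c", 0.2)]))  # [('a', 0.9), ('b', 0.6)]
print(l3_quality_gate([("a", 0.9), ("c", 0.2)]))              # []
```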

Several other parameters show how sources, and the content within them, are assessed for inclusion in the final response.

First comes semantic relevance.

Using the parameter embedding_similarity_threshold, the system measures how close each document’s embedding vector is to the reformulated query vector. This threshold acts as a minimum similarity score. So, if a page’s semantic alignment score falls below that numeric cutoff, the reranker discards it entirely. That means only documents whose content meaning sits above that similarity threshold (not just sharing keywords) continue forward for quality and trust evaluation.
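Once embeddings exist, the threshold test itself is simple. This sketch uses tiny hand-made vectors in place of real text_embedding_v1 outputs and an assumed cutoff of 0.75. It shows only the cosine-similarity comparison (the same idea behind the early calculate_matching_scores check), not how Perplexity computes its embeddings.

```python
import math

EMBEDDING_SIMILARITY_THRESHOLD = 0.75  # assumed cutoff


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def passes_semantic_gate(query_vec: list[float], doc_vec: list[float]) -> bool:
    return cosine_similarity(query_vec, doc_vec) >= EMBEDDING_SIMILARITY_THRESHOLD


# Toy 3-dimensional "embeddings" (real ones have hundreds of dimensions).
query = [0.9, 0.1, 0.0]
on_topic = [0.8, 0.2, 0.1]      # page whose meaning matches the query
keyword_only = [0.3, 0.1, 0.9]  # page that shares words but is about something else
print(passes_semantic_gate(query, on_topic))      # True
print(passes_semantic_gate(query, keyword_only))  # False
```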

Next is trust and contextual continuity, modeled through memory networks.

Parameters such as boost_page_with_memory, memory_limit, and related_pages_limit reward sources that demonstrate consistent coverage across related topics. If your site has multiple connected guides around the same entity or theme (as we said earlier, CLUSTERS WIN!), those internal references amplify each other. Together, they signal to the system that your expertise is deep and deliberately built.
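One way to picture boost_page_with_memory is a bonus that grows with the number of related pages from the same site, capped by related_pages_limit. The linear bonus, the cap value, the `memory_boost` helper, and the idea that “related” means internally linked pages on the same topic are all assumptions for illustration.

```python
RELATED_PAGES_LIMIT = 5        # assumed cap on how many related pages count
BOOST_PER_RELATED_PAGE = 0.04  # assumed bonus per related page


def memory_boost(page: str, site_pages: dict[str, dict]) -> float:
    """Return a score multiplier from internally linked pages on the same topic.

    site_pages maps a URL path to {"topic": str, "links": set of URL paths}.
    """
    topic = site_pages[page]["topic"]
    related = [url for url in site_pages[page]["links"]
               if url in site_pages and site_pages[url]["topic"] == topic]
    return 1.0 + BOOST_PER_RELATED_PAGE * min(len(related), RELATED_PAGES_LIMIT)


site = {
    "/llm-seo": {"topic": "llm seo",
                 "links": {"/llm-seo/embeddings", "/llm-seo/citations", "/llm-seo/schema", "/pricing"}},
    "/llm-seo/embeddings": {"topic": "llm seo", "links": {"/llm-seo"}},
    "/llm-seo/citations": {"topic": "llm seo", "links": {"/llm-seo"}},
    "/llm-seo/schema": {"topic": "llm seo", "links": {"/llm-seo"}},
    "/pricing": {"topic": "sales", "links": set()},
    "/lonely-post": {"topic": "llm seo", "links": set()},
}
print(round(memory_boost("/llm-seo", site), 2))  # 1.12
print(memory_boost("/lonely-post", site))        # 1.0
```

The pillar page linked to three same-topic guides earns a boost, while the isolated post stays at the baseline, mirroring the “clusters win” point above.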

Then comes authority balancing.

Perplexity’s reranker uses both algorithmic and manual trust inputs. Manual whitelists include high-confidence developer and documentation domains like GitHub, Stack Overflow, Codecademy, and Notion – they inject a baseline authority multiplier.

To ensure balance, blender_web_link_domain_limit and blender_web_link_percentage_threshold prevent any single domain (even an authoritative one) from monopolizing an answer. But still, it’s safe to say that having connections with these ecosystems can give you an inherent authority edge.

“Connections” can mean several things here:

- You’ve published content on one of these platforms (like a GitHub README or tutorial).

- Your product or company is referenced within them (for example, discussed in a Stack Overflow thread or open-source repo).

- You’ve linked to or cited these authoritative sources within your own content.

Each of these connections signals credibility because the reranker can trace the association.
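The interplay between a trust boost and the blender’s domain caps might look like the sketch below. The whitelist entries follow the article’s examples (exact domain spellings are assumptions), while the 1.2 multiplier, both limit values, and the order of operations are invented; the logs name the parameters without showing how they combine.

```python
from collections import Counter
from urllib.parse import urlparse

WHITELIST = {"github.com", "stackoverflow.com", "codecademy.com", "notion.so"}
WHITELIST_MULTIPLIER = 1.2                   # assumed authority boost
BLENDER_WEB_LINK_DOMAIN_LIMIT = 2            # assumed max links per domain
BLENDER_WEB_LINK_PERCENTAGE_THRESHOLD = 0.5  # assumed max share of slots per domain


def domain_of(url: str) -> str:
    return urlparse(url).netloc.removeprefix("www.")


def blend_citations(docs: list[tuple[str, float]], slots: int) -> list[str]:
    """Pick up to `slots` URLs by boosted score while capping any one domain."""
    def boosted(item: tuple[str, float]) -> float:
        url, score = item
        return score * (WHITELIST_MULTIPLIER if domain_of(url) in WHITELIST else 1.0)

    per_domain = Counter()
    picked: list[str] = []
    for url, _ in sorted(docs, key=boosted, reverse=True):
        domain = domain_of(url)
        over_count = per_domain[domain] >= BLENDER_WEB_LINK_DOMAIN_LIMIT
        over_share = (per_domain[domain] + 1) / slots > BLENDER_WEB_LINK_PERCENTAGE_THRESHOLD
        if over_count or over_share:
            continue
        per_domain[domain] += 1
        picked.append(url)
        if len(picked) == slots:
            break
    return picked


docs = [("https://stackoverflow.com/q/1", 0.7), ("https://stackoverflow.com/q/2", 0.7),
        ("https://stackoverflow.com/q/3", 0.7), ("https://blog.example/post", 0.8),
        ("https://docs.example/page", 0.6)]
print(blend_citations(docs, 3))
```

Stack Overflow’s boost lifts its first thread to the top, but the share cap keeps it to one of three slots, so the other sources still get cited.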

Freshness also weighs in here.

Through parameters like time_decay_rate and item_time_range_hours, Perplexity gradually lowers the weight of older pages. Even the best-written content loses visibility if it looks outdated. The easiest way to stay in rotation is to keep the page active, add new data points, refresh examples, and update your timestamps whenever you make meaningful edits. Those signals help Perplexity recognize that the content has been maintained and should be re-evaluated for relevance.
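A common way to implement gradual down-weighting is exponential decay. Whether time_decay_rate works this way, what time unit it uses, and what happens outside item_time_range_hours are all assumptions; the sketch treats the rate as per hour and zeroes out items older than the window.

```python
import math

TIME_DECAY_RATE = 0.001           # assumed decay per hour
ITEM_TIME_RANGE_HOURS = 24 * 365  # assumed window; older items get no freshness weight


def freshness_weight(age_hours: float) -> float:
    """Exponentially down-weight a page by age; return 0 outside the time range."""
    if age_hours > ITEM_TIME_RANGE_HOURS:
        return 0.0
    return math.exp(-TIME_DECAY_RATE * age_hours)


# If the reranker uses the last meaningful update as the timestamp (an assumption),
# refreshing a 30-day-old page resets its age and restores most of its weight.
stale = freshness_weight(30 * 24)
refreshed = freshness_weight(2 * 24)
print(round(stale, 3), round(refreshed, 3))
```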

Content diversity is another layer of quality control.

Parameters like diversity_hashtag_similarity_threshold and hashtag_match_threshold help Perplexity avoid surfacing multiple pages that say the same thing in slightly different ways. The reranker looks for repetitive language patterns or overlapping topical tags and keeps only the strongest or most distinct versions.
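A simple stand-in for diversity_hashtag_similarity_threshold is Jaccard overlap between pages’ topical tags: keep the highest-scoring page and drop later ones whose tags overlap too much with something already kept. Using Jaccard, the 0.6 threshold, and tag sets as the input are all assumptions.

```python
DIVERSITY_HASHTAG_SIMILARITY_THRESHOLD = 0.6  # assumed


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def diversify(pages: list[dict]) -> list[dict]:
    """Keep the strongest page per near-duplicate tag group (pages have url, score, tags)."""
    kept: list[dict] = []
    for page in sorted(pages, key=lambda p: p["score"], reverse=True):
        if all(jaccard(page["tags"], k["tags"]) < DIVERSITY_HASHTAG_SIMILARITY_THRESHOLD
               for k in kept):
            kept.append(page)
    return kept


pages = [
    {"url": "a", "score": 0.9, "tags": {"perplexity", "ranking", "seo"}},
    {"url": "b", "score": 0.7, "tags": {"perplexity", "ranking", "seo", "ai"}},
    {"url": "c", "score": 0.6, "tags": {"perplexity", "citations", "schema"}},
]
print([p["url"] for p in diversify(pages)])  # ['a', 'c']
```

Page b shares three of four tags with page a (overlap 0.75), so only the stronger of the two survives, while page c covers a distinct angle and stays.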

On top of all this, parameters like new_post_impression_threshold, new_post_ctr, and new_post_published_time_threshold_minutes determine whether a freshly published page earns enough external traction (impressions, clicks, and attention) to even enter the ranking pool. Pages that get early visibility and engagement are treated as high-interest and are far more likely to make it into Perplexity’s reranker pipeline in the first place.

However, short-term traction isn’t the whole story. The reranker also considers historical performance patterns through parameters like historic_engagement_v1, and this feature can counterbalance weaker early signals by factoring in a domain’s or author’s prior engagement strength.
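Read together, the new-post parameters and historic_engagement_v1 suggest a gate with a fallback. The sketch below encodes that reading with invented thresholds: a new page must clear impression and CTR minimums within a time window, unless its domain’s historic engagement is strong enough to carry it. None of these numbers, the `Post` type, or the fallback rule itself come from Perplexity.

```python
from dataclasses import dataclass

# All thresholds below are hypothetical stand-ins for the logged parameter names.
NEW_POST_PUBLISHED_TIME_THRESHOLD_MINUTES = 24 * 60  # window in which a post counts as "new"
NEW_POST_IMPRESSION_THRESHOLD = 500
NEW_POST_CTR = 0.02                                  # minimum early click-through rate
HISTORIC_ENGAGEMENT_FLOOR = 0.7                      # on an assumed 0..1 scale


@dataclass
class Post:
    minutes_since_publish: int
    impressions: int
    clicks: int
    historic_engagement: float  # stand-in for historic_engagement_v1


def enters_ranking_pool(post: Post) -> bool:
    """Early-traction gate with a historic-engagement fallback (toy model)."""
    if post.minutes_since_publish > NEW_POST_PUBLISHED_TIME_THRESHOLD_MINUTES:
        # Outside the new-post window this gate no longer applies; other
        # stages judge the page instead (assumption).
        return True
    ctr = post.clicks / post.impressions if post.impressions else 0.0
    early_traction = (post.impressions >= NEW_POST_IMPRESSION_THRESHOLD
                      and ctr >= NEW_POST_CTR)
    return early_traction or post.historic_engagement >= HISTORIC_ENGAGEMENT_FLOOR


print(enters_ranking_pool(Post(120, 800, 24, 0.3)))  # True: strong early CTR
print(enters_ranking_pool(Post(120, 800, 8, 0.3)))   # False: weak CTR, weak history
print(enters_ranking_pool(Post(120, 800, 8, 0.85)))  # True: carried by historic engagement
```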

ALL OF THIS tells us that Perplexity doesn’t judge a page only by what it says, but also by how it connects, how recent it is, and whether the system can trust it to represent reality without bias or redundancy.

| Factor Category | Key Parameters | Function / Impact on Ranking | Example |
| --- | --- | --- | --- |
| Quality Gate (L3 Reranker) | l3_reranker_drop_threshold, l3_reranker_drop_all_docs_if_count_less_equal | Define minimum content quality and viable document count. Pages below the quality threshold or from too-small result sets are dropped entirely. | A thin article might match the topic but still be discarded if it scores below the model’s confidence cutoff. |
| Semantic Relevance | embedding_similarity_threshold | Measures semantic closeness between a document and the reformulated query. Anything below the similarity score is excluded. | A post with matching keywords but weak conceptual overlap fails the semantic test. |
| Context & Continuity (Memory Networks) | boost_page_with_memory, memory_limit, related_pages_limit | Rewards domains that demonstrate consistent topical coverage and internal linkage. | A SaaS site with a full “LLM SEO” cluster ranks stronger than a single, isolated post. |
| Authority & Domain Balance | Manual whitelists, blender_web_link_domain_limit, blender_web_link_percentage_threshold | Combines manual trust boosts (GitHub, Stack Overflow, etc.) with algorithmic diversity controls to prevent one domain from dominating. | A Stack Overflow-cited article benefits from trust weighting but won’t crowd out other sources. |
| Freshness & Recency | time_decay_rate, item_time_range_hours | Gradually reduces visibility of outdated pages; refreshed content regains weight through updated timestamps or new data. | A 2023 “Perplexity ranking” post updated with 2025 data stays competitive. |
| Content Diversity | diversity_hashtag_similarity_threshold, hashtag_match_threshold | Removes near-duplicate or repetitive pages within the same topic cluster. Keeps one strong representative per idea. | Among multiple “how Perplexity ranks” guides, only the most distinct version remains. |
| Early Engagement (Inclusion Signals) | new_post_impression_threshold, new_post_ctr, new_post_published_time_threshold_minutes | Evaluates external performance of newly published pages – impressions and CTR determine eligibility for inclusion in the reranker. | A new post gaining early clicks across the web is more likely to enter Perplexity’s ranking pool. |
| Historical Engagement (Trust Memory) | historic_engagement_v1 | Acts as a counterweight to short-term underperformance by factoring in past engagement strength and reliability. | A domain with consistent prior traction can still rank even if a new post’s launch metrics are modest. |

Signal Alignment Stage

Before Perplexity finalizes its answer set, it looks outward for supporting signals. This is a double-checking step to confirm that the content it’s about to cite is genuinely relevant and in demand.

Cross-Platform Signals

One of the clearest signals comes from YouTube.

Metehan’s parameter mapping surfaced trending parameters such as trending_news_minimum_should_match, and his analysis indicates that trending queries inside Perplexity often sync with YouTube search and watch patterns. When a video title or description exactly matches a query that’s gaining traction within Perplexity, that video, and sometimes its linked page, receives a measurable visibility boost.

Multimodal Checks

Inside Perplexity’s backend configuration, there were multimodal embedding references. These are the parts of the system that check structured non-text inputs like tables, diagrams, or code snippets. This tells us that every visual asset should be machine-readable (for example, ALT text on images and structured markup like <table>). Doing that contributes to the page’s matching score against the query.

| Signal Category | Key Parameters | Function / Impact on Ranking | Example |
| --- | --- | --- | --- |
| Cross-Platform Correlation | trending_news_minimum_should_match | Detects alignment between Perplexity’s trending queries and external platforms (e.g., YouTube). Boosts visibility for topics validated across ecosystems. | A YouTube video and a web article sharing the same trending title both rise in Perplexity’s rankings. |
| Multimodal Checks | Multimodal embedding references, <table> / ALT / schema signals | Incorporates structured visuals and code blocks into similarity scoring, improving rank for machine-readable pages. | A tutorial with code snippets and diagrams ranks higher than a plain-text version. |
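A quick way to audit the machine-readability points above is to scan a page’s HTML for images without ALT text and count tables and code blocks. This uses Python’s standard `html.parser`; it checks your own markup only and says nothing about how Perplexity’s multimodal embeddings actually score those elements. The `MultimodalAudit` class and the sample HTML are illustrative.

```python
from html.parser import HTMLParser


class MultimodalAudit(HTMLParser):
    """Count tables and <pre> code blocks, and collect images that lack ALT text."""

    def __init__(self) -> None:
        super().__init__()
        self.tables = 0
        self.code_blocks = 0
        self.images_missing_alt: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "table":
            self.tables += 1
        elif tag == "pre":
            self.code_blocks += 1
        elif tag == "img" and not (attr_map.get("alt") or "").strip():
            self.images_missing_alt.append(attr_map.get("src") or "")


html = """
<h2>Ranking stages</h2>
<table><tr><th>Stage</th><th>Parameter</th></tr></table>
<pre><code>enable_union_retrieval = true</code></pre>
<img src="pipeline.png" alt="Perplexity ranking pipeline diagram">
<img src="chart.png">
"""
audit = MultimodalAudit()
audit.feed(html)
print(audit.tables, audit.code_blocks, audit.images_missing_alt)
# 1 1 ['chart.png']
```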

Final Selection

At the last stage, Perplexity narrows to just a handful of high-confidence answers.

For example, the parameter dislike_filter_limit shows up here. That’s the system drawing a hard line on how many negative reactions (dislikes, skips, “no-click” behaviors) a page can accumulate before it gets suppressed. It means even a well-optimized article can quietly disappear from answers if users keep bouncing.

Similarly, parameters like discover_no_click_7d_batch_embedding tell us that Perplexity is running rolling checks over a 7-day window. If your content is consistently pulled into retrievals but ignored by users, the algorithm learns not to surface it again. This tells us that to stay in the pool, your pieces have to continually engage users.

| Signal Category | Key Parameters | Function / Impact on Ranking | Example |
| --- | --- | --- | --- |
| Negative Feedback Filters | dislike_filter_limit | Sets a hard cap on how many negative signals (dislikes, skips, short dwell times) a document can receive before being suppressed. | A blog post that users repeatedly skip or downvote is dropped from future answer sets. |
| Rolling Engagement Checks | discover_no_click_7d_batch_embedding | Tracks user interactions over a 7-day window; if a page is consistently surfaced but ignored, it’s removed from active ranking. | A link that keeps appearing in results but never earns clicks gets excluded from future pulls. |
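The two final-stage filters can be approximated with an event log and a rolling seven-day window. The event format, the cap of 5 dislikes, and the rule “at least 20 impressions in seven days with zero clicks means suppress” are illustrative assumptions. The `_batch_embedding` suffix hints that the real check may operate on embeddings rather than raw counts; this sketch uses plain counts.

```python
from datetime import datetime, timedelta

DISLIKE_FILTER_LIMIT = 5           # assumed cap on negative signals
NO_CLICK_WINDOW = timedelta(days=7)
MIN_IMPRESSIONS_FOR_NO_CLICK = 20  # assumed


def is_suppressed(events: list[tuple[datetime, str]], now: datetime) -> bool:
    """events: (timestamp, kind) pairs where kind is 'impression', 'click', or 'dislike'."""
    # Dislikes are counted over all time in this toy model.
    dislikes = sum(1 for _, kind in events if kind == "dislike")
    if dislikes > DISLIKE_FILTER_LIMIT:
        return True
    # Only the last seven days count toward the no-click check.
    recent = [kind for ts, kind in events if now - ts <= NO_CLICK_WINDOW]
    return (recent.count("impression") >= MIN_IMPRESSIONS_FOR_NO_CLICK
            and recent.count("click") == 0)


now = datetime(2025, 1, 8)
ignored = [(now - timedelta(days=1), "impression")] * 25
clicked = ignored + [(now - timedelta(hours=2), "click")]
stale = [(now - timedelta(days=10), "impression")] * 25
print(is_suppressed(ignored, now))  # True: surfaced often, never clicked
print(is_suppressed(clicked, now))  # False: one recent click
print(is_suppressed(stale, now))    # False: impressions fall outside the window
```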

End-to-End Summary of How Perplexity’s Ranking Pipeline Works

| Stage | Core Function | Key Parameters | What It Decides / Impacts | Example in Action |
| --- | --- | --- | --- | --- |
| 1. Intent Mapping | Understands query meaning, classifies topic, and routes to correct index (trending vs evergreen). | text_embedding_v1, subscribed_topic_multiplier, top_topic_multiplier, restricted_topics, trending_news_index_name, suggested_index_name, fuzzy_dedup_threshold | Determines how queries are interpreted, categorized, and consolidated; sets the foundation for relevance and exposure. | A surge in searches for “OpenAI pricing update” crosses the trending threshold and moves into Perplexity’s real-time index. |
| 2. Retrieval | Gathers potential answers from Bing and Perplexity’s own cache, validates accessibility, and enforces diversity. | enable_union_retrieval, calculate_matching_scores, enable_ranking_model, feed_retrieval_limit_topic_match, persistent_feed_limit | Builds the eligible content pool – only crawlable, contextually relevant, and well-structured pages survive to the next stage. | A site blocking BingPreview in robots.txt fails validation and never enters Perplexity’s retrieval pool. |
| 3. Assessment (L3 Reranker) | Applies machine learning scoring to filter by semantic, trust, and engagement signals. | l3_reranker_drop_threshold, embedding_similarity_threshold, boost_page_with_memory, blender_web_link_domain_limit, time_decay_rate, new_post_ctr, historic_engagement_v1 | Decides which pages “survive” based on semantic match, authority, freshness, and engagement patterns. | A newer post with modest CTR survives reranking because its domain has strong historic engagement. |
| 4. Signal Alignment | Cross-checks candidate content against real-world and multimodal signals. | trending_news_minimum_should_match, multimodal embedding refs, discover_engagement_7d, historic_engagement_v1, time_decay_rate | Confirms that the content aligns with active demand, user behavior, and structured richness. | A YouTube video and article sharing the same trending title both gain a ranking boost. |
| 5. Final Selection | Chooses the final, high-confidence sources to cite and keeps them updated based on engagement. | dislike_filter_limit, discover_no_click_7d_batch_embedding | Filters out low-engagement or disliked pages and finalizes the visible answer set. | A frequently skipped article is dropped from future Perplexity answers within a week. |

Final Thoughts: How Perplexity’s Ranking Algorithm Works

Understanding which signals Perplexity listens to, and which levers you can influence, matters more now that more people use AI to research products and make decisions. If your content isn’t built to satisfy those systems, you’ll miss out, no matter how good you are at SEO.

By reverse engineering Perplexity’s ranking logic, we uncovered practical, real-world tactics you can start using:

- Make your content semantically deep so it aligns meaningfully with query embeddings

- Build topic clusters and internal links across related pages

- Use structured visuals, alt text, and machine-readable markup

- Launch content with engagement in mind (early traction matters)

- Keep your content fresh and iteratively improved

Those are the same levers you’ll see play out in our companion piece – How to Get Mentioned in Perplexity (Strategies that Actually Work) – where we translate these mechanics into practical steps you can start applying today.
