As a founder allowing an LLM crawler on your site creates opportunity and risk. The risk is that it might surface content and information you don’t want it to, or even worse, information about your users. The opportunity however, is ever When AI tools reference your brand in their answers, that’s marketing. But when they summarize your content so thoroughly that users don’t need to visit your site, you lose eyeballs on your site.

Beyond that, these crawlers might feed your documentation, knowledge base, or pricing details into systems that competitors use. And let’s not forget: aggressive crawling can significantly increase your server load.

Your best approach is straightforward: make deliberate choices about allowing, limiting, or blocking these crawlers based on what matters to your business: revenue, customer acquisition, and risk management.

What are LLM crawlers?

LLM crawlers are bots used by AI companies to access your content. You’re noticing them in your server logs because AI search tools have gone mainstream, and companies are competing fiercely to deliver better results.

LLM crawlers differ from traditional search crawlers (like Googlebot) in both purpose and behavior. While search crawlers index pages to rank them later, LLM crawler traffic aims to train models, fetch live answers, or operate as an agent in real time. This difference in intentions alters their crawl cadence, impact on your funnel, and the rules they abide by.

There are three primary LLM crawler types:

- Training Scrapers: These bots gather content to enrich the AI model’s knowledge base for training purposes. Once the content is used for training, it becomes part of the AI’s knowledge, even if you later update or remove the original page..

- Live-retrieval Fetchers: These bots scour the web in real time to gather content for immediate use in responses to user queries. Unlike training scrapers, they do not permanently store your content in a model’s knowledge base. Their visits are temporary, generating short-term server requests rather than continuous scraping..

Agentic Crawlers: Function like headless browsers, mimicking user behavior by clicking, scrolling, filling out forms, or running workflows on your site. They can interact with parts of your application that maintain information about a user’s actions over time, such as login sessions, shopping carts, or personalized dashboards. Because of this, they pose higher risks than simple scrapers if not managed carefully.

Understanding these three types of LLM crawlers and their respective intentions is fundamental for devising appropriate access policies that cater to each bot’s behavior and potential impact on your website’s performance.

How LLM crawlers work

LLM crawlers navigate your content by:

- Following backlinks from other websites,

- Read your XML sitemaps

- Structured references,

- Access publicly available URLs, and by

- Shared links in user queries.

Once they discover your content, LLM crawlers do more than just identify it for ranking, they process it differently from search engine crawlers, by breaking your content down into machine-readable signals that later fuel answers. As highlighted by Oomph, the process involves several stages:

- Crawling – Bots start by visiting URLs and downloading page content. Some bots, like Googlebot, execute JavaScript, while most other LLM crawlers work with only raw HTML and skip heavy rendering (for now).

- Chunking – The collected content is split into smaller, manageable units such as paragraphs or sections. Clear headings and consistent formatting help the system segment content effectively for later use.

- Vectorization – Each chunk is converted into numerical vectors that capture its semantic meaning. These vectors allow the system to quickly match content with user queries, and their quality depends on the clarity of the text.

- Indexing – Metadata like URLs, page titles, and schema markup is stored alongside vectors. This structured information is essential for filtering, prioritizing, and ranking relevant content.

Retrieval – When you ask a question, the system finds the most relevant pieces of information (or, chunks), organizes them (reranks them), and then creates complete answers, often showing where the information came from (citations).

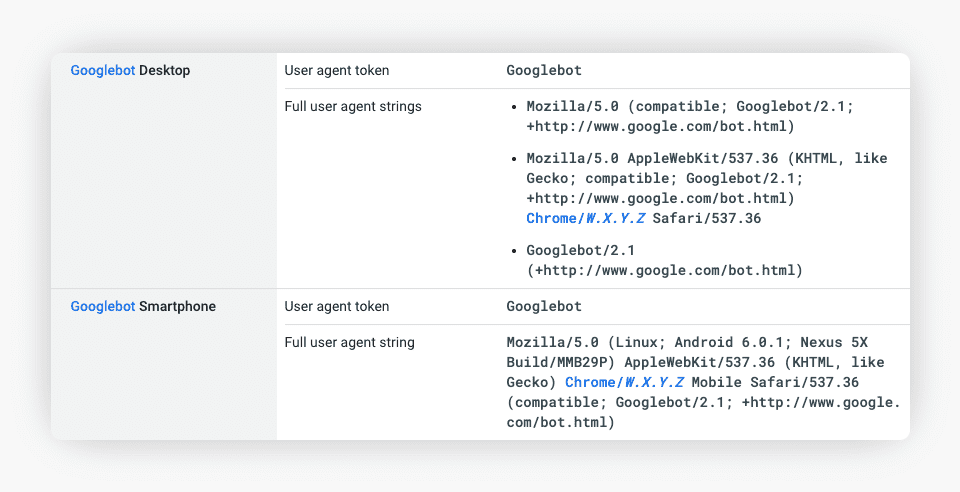

When visiting your site, these bots typically announce themselves through User-Agent strings, for instance this string indicates that the request is coming from Googlebot, Google’s web crawler:

Source: Justia Onward

Most well-known AI crawlers are considered legitimate because they come from public companies you know (Google, ChatGPT etc), they clearly identify themselves, follow site rules, and originate from verifiable IP addresses published by their providers. However, some malicious bots try to mimic these trusted crawlers by copying their User-Agent strings. Cloudflare announced in July 2024 a feature that enables all customers to block all AI bots, scrapers, and crawlers with a single click. This move responds to growing concerns over AI scraping, which can replicate content without permission, potentially impacting creators, publishers, and SaaS companies alike. The feature gives direct control over AI access to your digital assets, enabling you to protect content and data from unauthorized AI access.

To protect websites from unauthorized AI scraping and other malicious bots, you can use a multi-layered defense strategy. This includes adding instructions in the site’s metadata to guide crawlers, requiring logins for sensitive pages, maintaining lists of approved IP addresses, limiting how many requests a visitor can make in a short time, and using web application firewalls to detect and block suspicious behavior.

Get Noticed By LLMs

AI crawlers are scanning the web, but are they seeing your content? We can help make sure your content actually gets picked up and highlighted.

The LLM crawlers you’ll most likely see

Knowing which crawlers are accessing your site helps you manage content visibility, enforce opt-outs, and understand traffic patterns. This knowledge helps you optimize your website’s performance and also ensures that your content is being used in ways that align with your intentions.

| Bot / Agent | Purpose / Description |

| GPTBot | Collects data for model training. So, block it if you don’t want your content included in training sets. |

| ChatGPT-User | Fetches live pages when a URL from your site is referenced inside ChatGPT. |

| OAI-SearchBot | Crawls pages to power OpenAI’s search-like features. |

| PerplexityBot | Indexes sites to help generate AI-powered search results and summarized answers within Perplexity’s platform. These summaries often cite sources, which can increase your brand’s visibility, though clicks aren’t guaranteed. |

| Perplexity-User | Fetches live pages in real time when a user’s query directly calls for them, even if your site has opted out of general crawling. |

| GoogleOther | Is a generic crawler Google uses for non-search purposes, such as internal research and product development. It may fetch publicly available content but does not affect Google Search indexing or rankings.. |

| Google-Extended | Lets you control whether your content can be used to train future generations of Google’s Gemini models or provide grounding data (using Google Search index content to improve accuracy). Blocking Google-Extended does not affect your site’s visibility or ranking in Google Search. |

| Anthropic | Anthropic’s ClaudeBot and similar bots may appear with different verification methods. Always check reverse DNS or published IPs before trusting the traffic. |

| Amazonbot | Crawls content for Amazon AI and retail ecosystem services. |

| CCBot | Operated by Common Crawl, this bot collects publicly available content to contribute to multiple AI datasets. |

| PetalBot | Huawei’s web crawler that indexes content for Petal Search and AI services. |

| Bytespider | Operated by ByteDance, collects content for AI datasets and internal discovery tools. |

| YoudaoBot | NetEase’s bot that crawls sites to support AI search and educational content. |

| BingChatBot | Microsoft’s bot that collects content to improve responses in Bing Chat and other AI features. |

| NeevaBot | Collects content for AI-powered search results and summarization. |

| Meta-ExternalAgent | Meta’s bot that crawls for content discovery and indexing to support AI and search features. |

| AppleBot | Apple’s bot that crawls content for Siri and Spotlight search features. |

| CohereBot | Collects data to train and improve Cohere’s language models. |

| Diffbot | Provides structured data from web pages for AI training and other applications. |

| YaK | Yandex’s bot that crawls content for Yandex search engine and AI services. |

| TollBit | Monitors and analyzes AI bot traffic for website management and monetization. |

| 80legs | Offers web crawling services for data collection, including AI datasets. |

| Oxylabs | Provides web data collection and proxy infrastructure for AI and analytics use. |

How to Decide What to Allow or Block

Deciding whether to allow LLM crawlers access to your SaaS content isn’t a one-size-fits-all choice. The right approach depends on the type of content you host, your industry, and regulatory considerations.

Some content, like product pages, feature lists, and integrations, can benefit from AI discoverability and may drive new users to your site. Other content, especially sensitive information such as customer data, support documentation, or internal strategy materials, could pose legal, privacy, or compliance risks if incorporated into AI datasets.

The key is to balance visibility with protection. By assessing your goals, content sensitivity, and regulatory obligations, you can make informed decisions that maximize AI-driven discovery while safeguarding sensitive information.Below is a step-by-step framework to guide SaaS founders in deciding the best way to go.

SaaS Founder’s Framework: Should You Allow or Block LLM Crawlers?

Step 1 – Define Your Goal

Question: Do you want your SaaS to be discoverable in AI answers (features, integrations, positioning)?

- Yes → Go to Step 2

- No → BLOCK

KPI: Brand mentions in LLMs, new top-funnel discovery traffic from product/features pages

Step 2 – Revenue Sensitivity

Question: Would AI summaries replace key conversion steps (onboarding, pricing calls, playbooks)?

- Yes → BLOCK sensitive sections; Allow product/feature content

- No → Go to Step 3

KPI: Trial/demo signups preserved, onboarding/consulting revenue protected

Step 3 – Legal & Compliance Risk

Question: Do you host regulated or confidential data (health, finance, contracts, customer info, screenshots)?

- Yes → BLOCK those sections

- No → Go to Step 4

KPI: Zero sensitive info exposure in AI outputs, clean compliance reports (GDPR, HIPAA, NDAs)

Step 4 – Final Decision

- Allow All

- If: Goal is visibility + No revenue cannibalization + No compliance risks

- KPI: Increase in LLM mentions, demo/trial signups from AI discovery

- Block All

- If: Goal is protection + Conversions at risk OR high compliance exposure

- KPI: Trials steady, zero info leaks, onboarding revenue protected

- Mixed (Default for SaaS)

- Allow: Marketing pages, product features, integrations

- Block: Pricing sheets, onboarding docs, strategy playbooks, sensitive support content

- KPI: Balanced visibility + protection; LLM-driven discovery without conversion drop or leaks

Step 5 – Monitor & Adjust

Re-evaluate quarterly:

- If Visibility KPIs improve with no revenue harm → continue allowing

- If Conversion KPIs decline → expand blocking

- If Legal KPIs show risk → tighten restrictions immediately

Bottom Line

LLM crawlers are here to stay, and their impact on your SaaS business can be significant. By understanding the types of bots accessing your site, the risks they pose, and using a structured framework to guide access decisions, you can balance visibility, compliance, and user trust effectively.

Take a proactive approach: decide deliberately, implement technical controls, and monitor results regularly to ensure AI discovery works for your business.For expert guidance on managing AI-driven visibility and protecting your content while maximizing SaaS growth, Singularity Digital can help you stay ahead in the AI era.

Get Noticed By LLMs

AI crawlers are scanning the web, but are they seeing your content? We can help make sure your content actually gets picked up and highlighted.